This little bugger just so totally rocks!!! IMHO the most compelling aspects are:

- It’s cheap :). They tend to go for around $10-$25. They still turn up on eBay from time to time (none right now), and Amazon has some at the moment.

- It’s Radio Frequency technology – so you can zap iTunes to the next song from around the corner or out in the yard!! Even my fancy iMON VFD remote is Infrared based (limited by line-of-sight) and that winds up being a deal breaker in my environment… couch faces projector wall away from the PC, IR = major fail! :(

- It’s simple! – there are only the 5 most critical buttons to distract you… none of that typical Windows Media Center remote overload to worry about here… Play/Pause, Previous, Next & Volume Up/Down, that’s it.

Unfortunately, the vendor, Griffin, has chosen to discontinue this little wonder. If you’re interested in driving your PC-based media players, make sure to get the USB version, not the iPod version which appears to still be in production. Take note, the transmitters that come with the readily available iPod version are 100% compatible with the USB receiver. This is a nice way for us to obtain replacement transmitters to have around. Just check eBay… I just got a pair of clickers, including the iPod receiver and an interesting Velcro mount for $4.50, including shipping!!!

Griffin is nice enough to continue hosting their support page with drivers <whew>. These native drivers work on any 32bit Windows since XP (more on 64bit support below).

And Dmitry Fedorov has been keeping the dream alive by showing us how to build our own little application-specific .NET plugins for the basic Griffin driver API.

Ok so that’s all fine and dandy, now let’s get to the good stuff!!

I currently run iTunes and VLC on Windows 7 64bit, and I’ve found a couple of freeware tools that, well, make beautiful music together (couldn’t resist :)

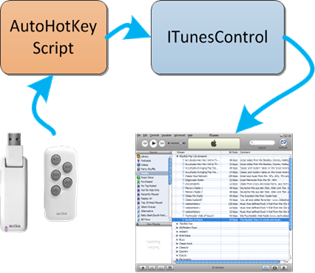

iTunesControl – In his infinite wisdom, Mr. Jobs hasn’t seen fit to support *global* Windows Media Keys in iTunes … fortunately for us, Carson created iTunesControl. Within the HotKeys section, one must simply click the desired key event (e.g. “Next Track”) and then press the corresponding AirClick button to make the assignment (Don’t forget to hit the Apply button). It also provides a very nifty, super configurable Heads Up Display that I absolutely love. To be more specific, I only mapped Play/Pause, Next & Previous this way. I left volume up/down defaulted to Windows global volume which provides convenient system wide volume control no matter what’s active (see last paragraph).

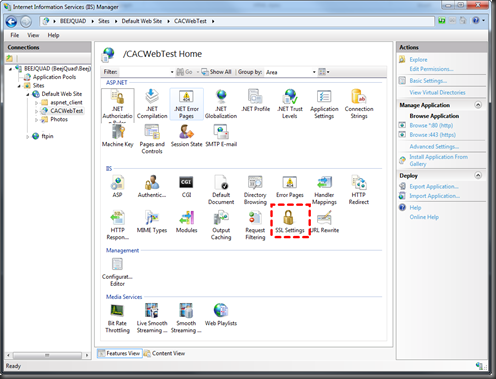

Now, the dark clouds started rolling in when I upgraded to Win 7 64bit and realized that the basic Griffin software install does not happen under 64bit, zip, nada, no-go <waaahh>… then I found this next little gem, affectionately called…

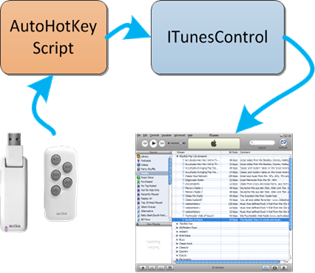

“AirClick Interface Script” - The way Jared explains it, fortunately for us, at least the HID layer of the Griffin driver is operational under 64bit. So he wrote an AutoHotKey script which picks up on the HID messages coming from the AirClick and turns those into Windows Media Keys. The WinMedia Keys are then caught by iTunesControl and iTunes immediately does our bidding, brilliant! Jared provides his original script source as well as a convenient compiled exe version that you just run and go.

NOTE: Jared’s script maps a 4 second press of the volume-down to PC go night-night. To me this isn’t so handy and I’d much rather have a repetitive volume adjust when held down. So I tweaked his script a little, find that here (ready-to-run EXE version). If you wish to run this raw script or perhaps compile some of your own tweaks, then you must use the original AutoHotKey. The newer “AutoHotKey_L” branch would not work for me.
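For anyone curious how the two hold behaviors differ, here’s a rough Python sketch of the idea. To be clear, this is not Jared’s AutoHotKey code and not my tweak either, just an illustration. The 4 second threshold comes from the note above; the function names, the single-step result for a short press, and the `initial_delay_ms` / `repeat_interval_ms` values are all made up for the example.

```python
def long_press_action(held_ms, threshold_ms=4000):
    """Original behavior as described: a long volume-down press sleeps the PC.

    The short-press result (a single volume step) is an assumption.
    """
    return "sleep_pc" if held_ms >= threshold_ms else "volume_down_once"


def repeat_steps(held_ms, initial_delay_ms=500, repeat_interval_ms=200):
    """Tweaked behavior: one step on press, then repeated steps while held.

    Steps fire at 0 ms, at initial_delay_ms, and every repeat_interval_ms
    after that. Both timing values are hypothetical.
    """
    if held_ms < initial_delay_ms:
        return 1
    repeats = (held_ms - initial_delay_ms) // repeat_interval_ms + 1
    return 1 + repeats
```

With these made-up timings, holding the button for one second gives four volume steps (at 0, 500, 700 and 900 ms) instead of nothing happening until the 4 second mark.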

The last thing I’ll mention is subtle but neato… Jared’s script actually checks to see which window is active. If none of the well-known players is focused (VLC, Winamp, MediaPlayerClassic, PowerDVD), then it defaults to firing Windows Media Key events. The nice thing is, if say VLC is active, then Jared’s script fires VLC specific play/pause, rewind & fast forward keys … so if I’m bouncing around the house, iTunes is getting the WinMedia events… if I’m sitting down watching a movie, I just have to make sure VLC is the active window and iTunes is left alone, perfectly intuitive!
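The active-window trick boils down to a lookup with a fallback. Here’s a minimal Python sketch of that logic, assuming the player is recognized by a substring of the window title (I haven’t checked how Jared’s script actually identifies windows). The action names on the right are placeholders, not the real keystrokes his script sends, and only VLC is filled in.

```python
# Placeholder action names; a real script would send actual keystrokes.
PLAYER_ACTIONS = {
    "vlc": {
        "play_pause": "vlc_play_pause",
        "previous": "vlc_rewind",
        "next": "vlc_fast_forward",
    },
}

# Fallback: global Windows media keys, picked up by iTunesControl.
MEDIA_KEYS = {
    "play_pause": "Media_Play_Pause",
    "previous": "Media_Prev",
    "next": "Media_Next",
    "volume_up": "Volume_Up",
    "volume_down": "Volume_Down",
}


def action_for(button, active_window_title):
    """Pick a player-specific action if a known player is focused,
    otherwise fall back to the global media key."""
    title = active_window_title.lower()
    for player, actions in PLAYER_ACTIONS.items():
        if player in title and button in actions:
            return actions[button]
    return MEDIA_KEYS[button]
```

So `action_for("next", "movie.mkv - VLC media player")` picks the VLC fast forward action, while the same button with any other window focused falls through to the global media key and lands in iTunes.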

UPDATE 10 March 2012

It’s a nice pastime to watch a photo slideshow while listening to tunez. Previously I’d been using the Google Photo Screensaver. But we soon wanted the ability to back up and stare at one of the slideshow photos, via the remote. I found Photo Screensaver Plus by Kamil Svoboda to fit very well. Among other very robust functionality, it supports left cursor to back up in the photo stream and space to pause the slideshow. With that, I edited my new AutoHotKey script (exe) to provide the following:

- when slideshow screensaver is not running, hold down play/pause remote button to start up screensaver slideshow

- when slideshow is running, reverse button goes to the previous image and pauses the slideshow

- when slideshow is paused, play/pause restarts the slideshow

- otherwise all buttons pass through to media events as usual
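Those four rules amount to a tiny state machine. Here’s a hedged Python sketch of it, not the AutoHotKey script itself. The state names and action strings are invented for illustration, and I’m assuming “previous image and pause” means sending the left cursor followed by space; the real script may do it differently. It also ignores how the screensaver gets dismissed.

```python
IDLE, RUNNING, PAUSED = "idle", "running", "paused"


def handle(state, button, held=False):
    """Return (new_state, actions) for one remote button event."""
    if state == IDLE and button == "play_pause" and held:
        # Holding play/pause with no slideshow running starts it.
        return RUNNING, ["start_screensaver"]
    if state == RUNNING and button == "previous":
        # Back up one photo, then pause so we can stare at it.
        return PAUSED, ["key:Left", "key:Space"]
    if state == PAUSED and button == "play_pause":
        # Space resumes the slideshow.
        return RUNNING, ["key:Space"]
    # Everything else passes through as a normal media event.
    return state, ["media:" + button]
```

The last line is what keeps the remote behaving normally everywhere else: anything that doesn’t match a slideshow rule is handed on as a plain media event.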

I really like how you can dual purpose the buttons depending on the context… that’s powerful.

Kamil’s screensaver also provides a hotkey to copy the current image to a favorites folder, very cool. And a hotkey to edit the image’s EXIF metadata – Name, Description & Comment. The nifty thing there is we also publish our photos via a Zenphoto web image gallery. Once we edit the EXIF info in the screensaver, a little PowerShell script of mine refreshes Zenphoto’s MySQL database entry for that image so the new image name and comments are immediately available for viewing and SEARCHING via the web gallery’s search facility, nice! The PowerShell script uses Microsoft’s PowerShellPack to provide effortless FileSystemWatcher integration. We really do have everything we need to mix and match unintentional building blocks into our own satisfying hobby solutions these days with amazingly little effort. I mean, who could’ve anticipated using a screensaver of all things as a data entry front end?
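My actual script is PowerShell and event driven via FileSystemWatcher, but the “notice the photo changed, then refresh the gallery” idea can be sketched in plain Python too. Note this swaps in simple polling (comparing modification times between two snapshots) instead of a real file system watcher, and it stops short of the database update: `snapshot` and `changed_files` are names I made up, and the Zenphoto/MySQL refresh would be whatever you call for each changed path.

```python
import os


def snapshot(folder):
    """Map each file path in folder to its last-modified time (ns)."""
    with os.scandir(folder) as entries:
        return {
            entry.path: entry.stat().st_mtime_ns
            for entry in entries
            if entry.is_file()
        }


def changed_files(before, after):
    """Paths that are new, or whose modification time differs."""
    return sorted(
        path for path, mtime in after.items() if before.get(path) != mtime
    )
```

In use, you’d take a snapshot, wait a few seconds, take another, and hand each path from `changed_files` to your own gallery refresh routine before repeating.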

Hot Corners - This free tool does the job and AutoIT source code is provided.